When teams start using AI for content, the pattern is predictable. Someone decides an LLM can produce a passable draft in minutes. Word spreads. Soon, people across the organization are prompting their way through content tasks, each with their own approach, their own shortcuts, their own assumptions about what “good enough” means.

This feels like efficiency, but in my experience, it’s not.

Why “just prompt it” breaks at scale

Call it prompt-and-pray: ad hoc AI usage that treats prompting as a stable input when it’s anything but.

The same task, handled by different people with different prompting habits, produces different results. The same person prompting the same task on different days can also produce different results.

Multiply that variability across a team, across weeks, across dozens of content pieces, and you get compounding inconsistency that damages brand coherence and forces downstream corrections that wipe out the efficiency gains you thought you’d achieved.

A Wharton study on prompt engineering confirms what teams discover the hard way: prompt and evaluation variations cause substantial variability in LLM performance. Small changes in structure, context, or guidelines shift outcomes in ways that are difficult to predict and harder to control without standardization and evaluation baked in.

We’ve seen it in review cycles. Drafts seem fine — even excellent — at first glance, then fall apart under scrutiny. They miss the voice, repeat ideas, shift abruptly, and drift from the brief. They contain plausible-sounding claims that don’t hold up. Evaluators catch problems late, if at all, and quality swings wildly across pieces, writers, and time.

The fix is operational: guardrails, defined roles, structured inputs.

A content production workflow is a system, not a writing trick

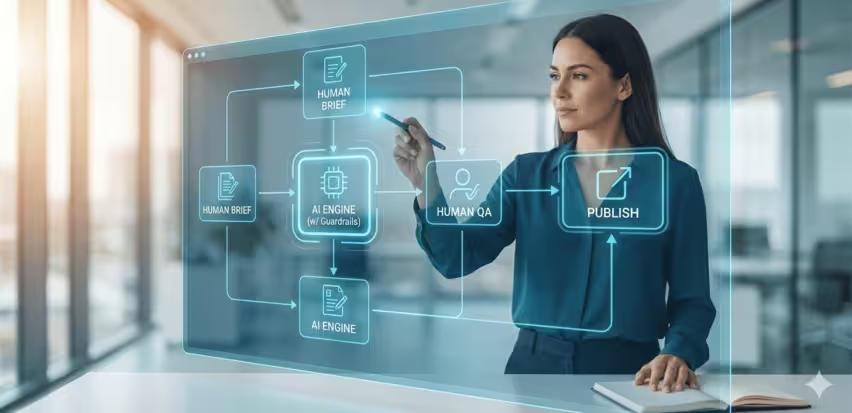

When a content production workflow works at scale, it’s an end-to-end operational system:

- Inputs: briefs, examples, constraints, and context structured specifically for AI

- Roles: who creates, who drafts, who evaluates, who approves

- Workflow stages: planning through production through publishing

- QA and validation: defined standards and consistency checks, separate from editing

- Measurement: feedback loops connecting output to outcomes

AI changes what each of these components requires in practice.

For example, using a human brief as an AI brief is one of the most predictable failure points I see. Human briefs assume context, allow interpretation, rely on the writer’s judgment to fill gaps. AI inputs need explicit structure: clear constraints, relevant examples, specific instructions for what to include and avoid.

This mismatch between human workflows and AI requirements isn’t an edge case. It’s a systemic reason many organizations struggle to move from experimentation to scale.

McKinsey’s 2025 AI survey illustrates the gap: 88% of respondents say their organizations regularly use AI in at least one business function, but only about one-third have begun scaling at the enterprise level. Organizations that redesign workflows and define when human validation is required tend to realize more meaningful value.

At scale, a content production workflow needs guardrails that answer specific questions:

- Who decides what gets drafted?

- What gets checked before it moves forward?

- What can’t ship without sign-off?

- How are editing and QA defined and separated?

One of the most common guardrail failures shows up when roles blur or collapse. Editing improves a piece. QA enforces standards. Conflating them causes problems at scale.

Efficiency only counts if quality holds.

Where AI adds value beyond drafting

Teams that focus AI exclusively on drafting are underutilizing the capability and concentrating risk. Draft quality may improve, but the workflow around it stays fragile and inefficient.

AI can reduce failure risk across the workflow:

- Article-level planning: SERP analysis, content gaps, and brief inputs

- Editing and optimization support: clarity, concision, and SEO alignment

- Visual opportunities: where images, diagrams, or design elements would strengthen content

- Design: visual briefs, layout guidance, and asset requirements

- Publishing requirements: metadata needs, schema requirements, linking direction, and distribution tasks

This reduces downstream rework and tightens handoffs. When AI assists with optimization during production, fewer issues surface in final review. When AI maps publishing requirements early, fewer tasks get missed at launch.

Apply AI where it strengthens clarity, completeness, and publishing readiness.

The biggest failures rarely happen in the first draft. They happen in the gaps between stages, where handoffs break down, assumptions go unverified, and problems compound before anyone catches them. Applying AI only at the drafting stage leaves those gaps untouched.

The real failure points between draft and publish

I’ve watched this scenario play out a few times:

An agency team shifts from human-only drafting to AI-assisted production. They keep their existing brief process. Account managers use those briefs to generate drafts in AI. Proofreaders are asked to humanize the output. QA is deprioritized under the assumption that editing covers it and the AI-generated copy looks clean.

The end client notices a quality shift and identifiable AI-generated content. Trust erodes. Efficiency gains evaporate into rework and relationship repair.

This is the cascade effect: each broken link compounds the next. What looked like reasonable decisions created systemic failure:

- Brief mismatch: The brief was written for a human writer, not for AI. It assumed interpretation, judgment, and context-filling that the system couldn’t reliably provide.

- Role mismatch: Drafting was handled by people without training in AI operation or content evaluation. Prompting replaced production discipline.

- Unstandardized prompting: Without shared prompts or constraints, output quality varied based on who was prompting and how.

- Misapplied humanization: Proofreaders were asked to “humanize” AI-generated copy. Lacking a defined process for evaluation, review was reduced to surface edits and basic fact checks.

- QA bypass: No one validated the output against defined standards before publication.

When AI use becomes identifiable to readers, the risk extends beyond internal quality control. Research from the Nuremberg Institute for Market Decisions found that identical ads labeled “AI-generated” were evaluated more negatively than when presented as “human-made.” Effects vary by category and context, but the directional finding matters.

Quality failures that make AI use visible don’t stay contained to production. They surface in trust, perception, and brand impact.

Standardize what must be consistent, flex what can vary

The rule: wherever rigid consistency is required, standardize to remove ambiguity.

Voice and structure are the clearest examples. If your brand voice must be recognizable across hundreds of pieces produced by multiple people using AI, any ambiguity in how that voice is defined, prompted, and evaluated will show up as drift in the output.

Certain content types also demand structural consistency. Location pages are a common case. They’re built to guide readers through specific information in a deliberate order. Without a system that keeps the LLMs aligned to that structure, pages will vary, and performance will vary with them.

Ambiguity is the enemy. In workflows, it looks like:

- Briefs that say “make it engaging” without defining what that means

- Prompts that vary based on who’s writing them

- Review criteria that live in someone’s head rather than a document

- Roles where no one owns specific quality dimensions

- Handoffs without defined completion criteria or acceptance benchmarks

- Outlines that specify structure but not brand positions, priorities, or opinions

Where rigid consistency isn’t required, let it flex. Creative approaches, illustrative examples, and surface-level details can vary as long as core constraints are honored. Over-standardizing these elements slows teams without improving outcomes. Under-standardizing what actually matters produces inconsistency that only shows up in review.

Identify where consistency matters most — voice, accuracy, compliance, brand alignment — and remove ambiguity there first.

Humans in the loop, but with different jobs

AI-assisted content doesn’t remove humans from the workflow. It changes what they do.

Humans stay in the loop across practically every stage. But one person with strong evaluation skills and AI training can cover ground that used to require a team. Traditional structures might separate strategist, writer, and editor into distinct positions. In an AI-assisted workflow, a capable evaluator can handle all three: defining the brief, overseeing AI drafting, evaluating output against standards.

This is role compression. It works when evaluation capability is strong and the person’s been trained to operate AI intentionally. It fails when organizations assume anyone can prompt effectively or that AI outputs don’t need knowledgeable review. That assumption is reinforced by automation bias.

BCG’s research on human oversight highlights the tendency to over-trust automated outputs. Without designed oversight and escalation paths, review becomes a rubber stamp. McKinsey’s 2025 survey found that 51% of respondents from organizations using AI report at least one negative consequence, with nearly one-third reporting consequences from AI inaccuracy.

The non-negotiable: don’t let AI replace human knowledge and judgment. AI generates outputs that sound confident and polished while being factually wrong, strategically misaligned, or tonally off. “Looks good” isn’t the same as “is good.”

Oversight design means defining specifics:

- What standards define “good enough” for this content type

- What failure modes the evaluator is responsible for catching

- Where human judgment overrides AI output rather than refining it

- When issues escalate instead of getting edited around

AI operation is a skill. Role compression only works when that skill is trained.

“Isn’t strong prompting enough?”

Here’s a fair counterargument: With capable models and strong prompting, you can produce high-quality content consistently. Workflow layers, QA, governance, extra roles mainly slow teams down and eat into efficiency gains.

It’s true in a narrow case. A small team with strong evaluators and tight constraints can run lighter. When the person prompting also knows the subject, understands the audience, and catches problems immediately, the feedback loop is fast and failure modes are limited.

The risk emerges at scale. This argument existed before AI: you don’t need all these people, you don’t need all this process, a capable individual can handle it.

Sometimes true.

But failure points showed up when that individual was unavailable, when work scaled beyond what one person could evaluate, when knowledge gaps went unnoticed until after publication.

With AI, those risks compound:

- Outputs appear confident while masking factual or strategic errors

- Voice drift becomes subtle and harder to detect

- Plausible content passes review while missing intent or requirements

Complete faith in LLM decisions without designed evaluation means issues surface after execution: in client feedback, performance metrics, brand perception.

The remedy isn’t rejecting AI efficiency. It’s designing the system so efficiency doesn’t come at the expense of outcomes. QA, evaluation checkpoints, and defined accountability let teams push efficiency further while maintaining quality control.

What to do next: the minimum viable workflow mindset

Scalable AI content production workflows depend on operational design. Teams that succeed treat production as a system: defined roles, structured inputs, measurement loops connecting output to outcomes.

If you’re formalizing an AI content workflow:

- Eliminate ambiguity where consistency is required, especially voice, accuracy, and brand standards

- Define clear roles and decision ownership across the workflow

- Invest in structured, reusable prompt systems that encode brand and content standards

- Train the people responsible for operating and evaluating AI outputs

- When optimizing for efficiency, evaluate how changes affect output quality and downstream risk

You can maximize efficiency while protecting outcomes. The goal isn’t process for its own sake. It’s identifying where failures emerge and designing controls that prevent them.

Identify where consistency matters most to your brand and audience, and standardize there first. That’s where operational design pays off fastest.